Customers come to us asking how we compare against competitors, so we asked ChatGPT to write an objective comparison of LLM Pulse (that’s us) and GEO Metrics (formerly LLMO Metrics), another leading Spanish-created AI visibility tracking SaaS. Below is the output we received, reviewed for factual accuracy and updated with clarifications where public claims are ambiguous.

We welcome further feedback from the GEO Metrics (formerly LLMO Metrics) team to clarify any claims made by the AI. The sole intention of this post is to help users make a more informed decision 😀


We aim to update this post regularly as both solutions improve their offerings.

Latest review: 23rd of January, 2026.

TL;DR

- Coverage: GEO Metrics lists coverage for ChatGPT, Gemini, Claude, Copilot, Perplexity, DeepSeek, Grok, Google AI Overviews, and now Google AI Mode. LLM Pulse covers ChatGPT, Perplexity Search, Google AI Mode, Google AI Overviews, and Gemini on all plans. Claude, Meta AI, Grok, and DeepSeek are available on Enterprise plans.

- Cadence: GEO Metrics uses daily visibility reports. LLM Pulse uses weekly by default (daily available on request). In practice, daily data rarely adds meaningful signal over weekly for brand-visibility use cases—AI answers don’t change that dramatically day-to-day.

- “Prompt search volume”: No major LLM vendor publishes per-prompt query counts. Any such numbers are modeled estimates, not ground truth. GEO Metrics uses traditional search volumes as a proxy. LLM Pulse prioritizes verifiable measurements—we don’t invent demand via statistical guesswork.

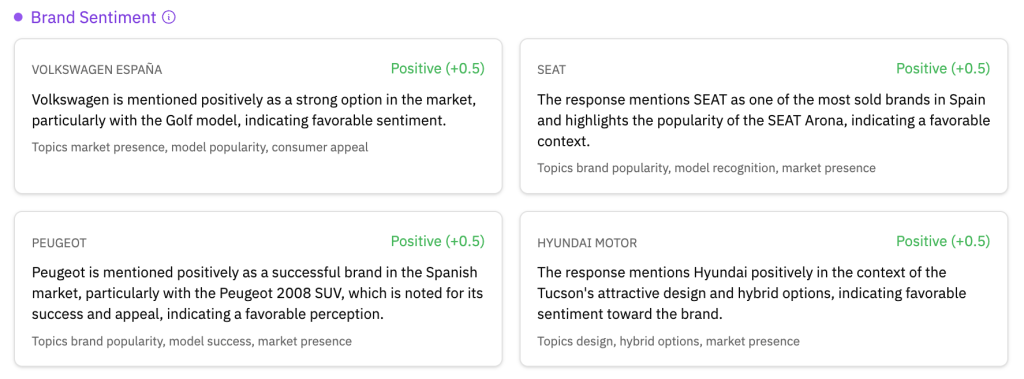

- Brand sentiment: LLM Pulse’s Brand Sentiment is live and provides consistent, explainable sentiment across tracked prompts and models.

- Pricing: At similar prompt counts, LLM Pulse comes in significantly lower on price. GEO Metrics offers broader LLM coverage (more models on base plans) and AI ranking suggestions.

What each platform tracks

LLM Pulse

- LLMs covered (all plans): ChatGPT, Perplexity Search, Google AI Overviews, Google AI Mode, and Gemini.

- Enterprise adds: Claude, Meta AI, Grok, DeepSeek, and more.

- Core features: Prompt tracking, citations analysis (see which sources drive answers), visibility scoring, competitor benchmarking, prompt suggestions, tagging, Brand Sentiment (live), Query fan out, Content Intelligence, Looker Studio connector, API access, and Chrome extension.

- Cadence: Weekly by default (daily available on request). Clear response caps per model for predictable usage.

GEO Metrics (formerly LLMO Metrics)

- LLMs covered: ChatGPT, Gemini, Claude, Copilot, Perplexity, DeepSeek, Grok, Google AI Overviews, and Google AI Mode.

- Core features: Answer/brand-mention accuracy, benchmarking, AI-powered recommendations to improve rankings, Prompt (Traditional) Search Volume view, and daily visibility reports.

- Cadence: Daily visibility reports.

Daily vs. weekly tracking: what matters in practice

For AI visibility, day-to-day movement is typically modest—especially in Google AI Mode, where third-party studies indicate higher stability versus AI Overviews. Weekly cadence tends to strike the best signal-to-noise balance for strategic decisions, while avoiding the operational overhead (and analysis churn) of reviewing mostly unchanged daily snapshots.

Bottom line: If you’re optimizing messaging, content, and citations, weekly trends are usually the right granularity. Daily can be useful for special campaigns or incident monitoring, but it’s rarely decisive for ongoing brand visibility programs.
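To make the weekly view concrete, here is a minimal Python sketch of rolling daily readings up into weekly visibility rates. It assumes a hypothetical per-day record of (date, responses mentioning the brand, total responses) and defines visibility as the share of responses that mention the brand. Neither the record shape nor the `weekly_visibility` function reflects either product's actual data model or API, and the sample numbers are made up.

```python
from collections import defaultdict
from datetime import date, timedelta


def weekly_visibility(daily: list[tuple[date, int, int]]) -> dict[tuple[int, int], float]:
    """Roll (day, brand_mentions, responses) rows up into per-ISO-week visibility rates.

    Hypothetical definition: visibility rate = responses mentioning the brand / total responses.
    """
    mentions: dict[tuple[int, int], int] = defaultdict(int)
    responses: dict[tuple[int, int], int] = defaultdict(int)
    for day, mentioned, total in daily:
        iso_year, iso_week, _ = day.isocalendar()
        week = (iso_year, iso_week)  # group by (ISO year, ISO week number)
        mentions[week] += mentioned
        responses[week] += total
    # Skip any week with zero responses to avoid dividing by zero.
    return {week: mentions[week] / responses[week] for week in responses if responses[week]}


# Made-up illustrative data: 14 days starting Monday 5 January 2026, 10 responses per day.
daily = [(date(2026, 1, 5) + timedelta(days=i), 3 if i % 2 else 4, 10) for i in range(14)]
print(weekly_visibility(daily))
```

Pooling seven days of responses means each weekly rate rests on roughly seven times as many responses as a single daily snapshot, which is one reason week-level trends tend to be steadier.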

AI model coverage comparison

| AI model | LLM Pulse (all plans) | GEO Metrics (all plans) |
|---|---|---|
| ChatGPT | ✅ | ✅ |
| Perplexity | ✅ | ✅ |
| Google AI Mode | ✅ | ✅ |
| Google AI Overviews | ✅ | ✅ |
| Gemini | ✅ | ✅ |
| Claude | Enterprise | ✅ |
| Copilot | Enterprise | ✅ |
| DeepSeek | Enterprise | ✅ |
| Grok | Enterprise | ✅ |
| Meta AI | Enterprise | — |

Summary: GEO Metrics offers broader LLM coverage on base plans (7+ models). LLM Pulse focuses on the 5 most-used AI search platforms (ChatGPT, Perplexity, AI Mode, AI Overviews, Gemini) and reserves less common models for Enterprise. Both now track Google AI Mode.

About that “Prompt Search Volume” idea

No major chat assistant or LLM vendor provides public, per-prompt query volumes. Without official data, any "prompt search volume" must be a modeled estimate. GEO Metrics uses traditional search volume as a proxy. To us this feels like comparing apples and pears, but it can serve as a reasonable directional signal if you understand its limitations.

LLM Pulse’s stance: We will not invent demand via opaque statistical models. We focus on auditable measurements—what answers AIs give, who they cite, how often your brand appears, how sentiment trends, and how visibility shifts over time across engines we actively track.

Feature-by-feature: strengths of each

Where GEO Metrics is strong

- Broad engine coverage: Tracks Claude, Copilot, DeepSeek, and Grok on all plans (not just Enterprise).

- AI recommendations: Built-in guidance for improving AI-answer rankings.

- Daily reports: Higher-frequency reporting for teams that want it.

- Enterprise clients: Working with brands like DHL, SeQura, and multiple universities.

Where LLM Pulse is strong

- Price efficiency: Significantly more prompts per € at every tier.

- Data you can audit: Detailed responses with citations and competitor side-by-side views; predictable per-model response caps.

- Brand Sentiment: A robust, explainable signal across prompts/models—helpful for product, PR, and CX teams.

- Query fan out: See how AI models expand your prompts into sub-queries.

- Content Intelligence: AI-powered content optimization recommendations.

- Integrations: Looker Studio connector, API access, GA4 integration, Chrome extension.

- App store tracking: iOS App Store and Google Play visibility (unique to LLM Pulse).

- White label: Agency-ready branded reports.

Public pricing and limits

| Tier | LLM Pulse | GEO Metrics |
|---|---|---|
| Entry | €49/month (40 prompts, 5 models) | €80/month (20 prompts, 7+ models) |
| Mid | €99/month (100 prompts, 5 models) | €245/month (100 prompts, 7+ models) |
| Scale | €299/month (300 prompts, 5 models) | €690/month (300 prompts, 7+ models) |
| Enterprise | Custom (10+ models) | Custom |

Notes: LLM Pulse includes projects, competitor limits per project, and explicit responses/week per model caps. GEO Metrics includes “Access +7 AI engines,” “AI recommendations,” “advanced ranking suggestions,” and “daily visibility reports.” Both offer free trials.
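The "prompts per €" claim can be checked directly against the list prices in the pricing table. This short Python sketch divides each plan's monthly price by its prompt allowance. It uses only the figures from that table, and it deliberately ignores differences in model count (5 vs 7+), projects, and response caps; `PLANS` and `price_per_prompt` are illustrative names, not part of either product.

```python
# Monthly list prices (EUR) and prompt allowances, copied from the public pricing table.
PLANS = {
    "Entry": {"LLM Pulse": (49, 40), "GEO Metrics": (80, 20)},
    "Mid": {"LLM Pulse": (99, 100), "GEO Metrics": (245, 100)},
    "Scale": {"LLM Pulse": (299, 300), "GEO Metrics": (690, 300)},
}


def price_per_prompt(price_eur: float, prompts: int) -> float:
    """Monthly list price divided by the number of tracked prompts (ignores model count)."""
    return price_eur / prompts


for tier, vendors in PLANS.items():
    costs = {name: price_per_prompt(price, prompts) for name, (price, prompts) in vendors.items()}
    summary = ", ".join(f"{name} €{cost:.2f}/prompt" for name, cost in costs.items())
    print(f"{tier}: {summary}")
```

Because it compares price per prompt only, this is a narrow view: a per-prompt-per-model comparison would weigh GEO Metrics' broader base-plan coverage differently.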

Cost snapshots (illustrative comparisons)

| Prompt volume | LLM Pulse | GEO Metrics | Savings with LLM Pulse |
|---|---|---|---|
| ~20 prompts | €49/month (Starter) | €80/month (Freelance) | €31/month (39%) |
| ~100 prompts | €99/month (Growth) | €245/month (Pro) | €146/month (60%) |
| ~300 prompts | €299/month (Scale) | €690/month (Enterprise) | €391/month (57%) |

Annual savings at 100 prompts: €1,752/year choosing LLM Pulse over GEO Metrics.
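The savings figures in the cost snapshot are straightforward arithmetic on list prices. As a quick check, this Python sketch recomputes them, assuming (as the table appears to) that percentage savings are expressed relative to the GEO Metrics price. The `savings` helper is an illustrative name only.

```python
def savings(llm_pulse_eur: float, geo_metrics_eur: float) -> tuple[float, float]:
    """Return (monthly savings in EUR, savings as a percent of the GEO Metrics price)."""
    diff = geo_metrics_eur - llm_pulse_eur
    return diff, 100 * diff / geo_metrics_eur


# (LLM Pulse price, GEO Metrics price) per month, from the cost snapshot table.
SNAPSHOTS = {"~20 prompts": (49, 80), "~100 prompts": (99, 245), "~300 prompts": (299, 690)}

for label, (pulse, geo) in SNAPSHOTS.items():
    monthly, pct = savings(pulse, geo)
    print(f"{label}: €{monthly:.0f}/month ({pct:.0f}%)")
# ~20 prompts: €31/month (39%)
# ~100 prompts: €146/month (60%)
# ~300 prompts: €391/month (57%)

monthly_100, _ = savings(99, 245)
print(f"Annual savings at 100 prompts: €{12 * monthly_100:,.0f}")  # €1,752
```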

The trade-off: If you need Claude, Copilot, DeepSeek, or Grok on non-Enterprise plans, GEO Metrics includes them. If your focus is ChatGPT, Perplexity, Gemini, AI Mode, and AI Overviews—the platforms with the most actual search volume—LLM Pulse delivers those at significantly lower cost.

Additional features comparison

| Feature | LLM Pulse | GEO Metrics |
|---|---|---|
| Brand Sentiment | ✅ Live | — |
| Query fan out | ✅ | — |
| Content Intelligence | ✅ | AI recommendations |
| Looker Studio connector | ✅ | — |
| API access | ✅ (Growth+) | — |
| App store tracking | ✅ | — |
| White label | ✅ | — |
| Chrome extension | ✅ | — |
| Daily reports | On request | ✅ Standard |
| AI ranking suggestions | Content Intelligence | ✅ |
| Prompt search volume | — | ✅ (traditional SV proxy) |
| Free trial | ✅ 14 days | ✅ 14 days |

Who should pick what?

Choose LLM Pulse if…

- You want the 5 most-used AI search platforms (ChatGPT, Perplexity, Gemini, AI Mode, AI Overviews) at the best price.

- You value auditable, accurate measurements over modeled demand and prefer predictable per-model caps.

- You’ll use Brand Sentiment, citations analysis, Query fan out, and competitor views to drive PR/content ops.

- You need integrations like Looker Studio, API, or GA4.

- You’re an agency needing white label reports.

- You have mobile apps and need app store tracking.

- Price efficiency matters: you want to save roughly 40-60% at comparable prompt counts.

Choose GEO Metrics if…

- You need broader LLM coverage right now (Claude, Copilot, DeepSeek, Grok on all plans).

- You want AI ranking suggestions and “prompt search volume” as directional guidance.

- You prefer daily reporting as standard.

- You’re comfortable paying a premium for more AI models on base plans.

What’s changed since September 2025

- GEO Metrics added Google AI Mode — previously they listed AI Overviews but not AI Mode specifically. Both platforms now track AI Mode.

- LLM Pulse added Gemini — now included on all plans alongside ChatGPT, Perplexity, AI Mode, and AI Overviews.

- LLM Pulse shipped Query fan out — see how AI models expand prompts into sub-queries.

- LLM Pulse added Looker Studio connector — build custom dashboards combining AI visibility with other marketing data.

- LLM Pulse expanded Enterprise coverage — Claude, Meta AI, Grok, DeepSeek now available for Enterprise clients.

Final thoughts

Both LLM Pulse and GEO Metrics are serious tools for AI visibility tracking, built by teams who understand the space. The choice comes down to what you value most:

- More AI models on base plans? → GEO Metrics

- Better price-per-prompt and integrations? → LLM Pulse

We’re happy to have GEO Metrics as a fellow Spanish-created solution pushing the category forward. Competition makes everyone better.

Start your free evaluation: Try LLM Pulse free for 14 days.